In the fast-moving world of graphics technology, industry giants like AMD and Nvidia keep pushing boundaries. Each year they invest heavily in advanced rendering techniques, either to squeeze more performance out of current hardware or to shape the graphics chips of tomorrow. A recently published AMD patent application (United States Patent Application 20250005842) highlights this dedication. It reveals the company’s exploration of neural networks for ray tracing, research that was already underway when the application was filed in June 2023 and that became public when the application was published in January of this year. The discovery of this application, along with others concerning BVH traversal and compression, underscores AMD’s forward-thinking strategy. The neural network filing is particularly significant because of its timeline and its probable reliance on cooperative vectors, a new extension to Direct3D and Vulkan that lets shader units use matrix or tensor units to process small neural networks.
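The idea behind cooperative vectors, roughly, is this: each shader invocation multiplies its own small input vector by a weight matrix shared across many invocations, and the hardware can treat those per-thread products as one larger matrix multiplication for its matrix or tensor units. The Python sketch below is a CPU-side illustration of that equivalence only. It does not use the actual Direct3D or Vulkan API, and the function names and weights are hypothetical.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def mat_vec(weights: Matrix, x: Vector) -> Vector:
    """What one shader invocation computes for its own input: y = W @ x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b: the operation a matrix/tensor unit executes in bulk."""
    b_cols = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in b_cols] for row in a]


def per_lane(weights: Matrix, lane_inputs: List[Vector]) -> List[Vector]:
    # Each lane evaluates its own vector-matrix product independently.
    return [mat_vec(weights, x) for x in lane_inputs]


def batched(weights: Matrix, lane_inputs: List[Vector]) -> List[Vector]:
    # Stack the lanes' inputs as columns: X is (in_dim x lanes).
    x_cols = [list(col) for col in zip(*lane_inputs)]
    y = mat_mul(weights, x_cols)            # (out_dim x lanes), one matrix product
    return [list(col) for col in zip(*y)]   # split back into one output per lane


# Hypothetical 2x3 weight layer shared by four lanes.
W = [[1, 2, 3], [0, -1, 4]]
lanes = [[1, 0, 2], [3, 1, 1], [0, 2, 5], [1, 1, 1]]

print(per_lane(W, lanes))                        # [[7, 8], [8, 3], [19, 18], [6, 3]]
print(batched(W, lanes) == per_lane(W, lanes))   # True
```

Because every lane shares the same weights, the batched form produces the same results while giving the hardware a single dense matrix product to schedule. That is the property that makes small networks cheap enough to run inside shaders.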

AMD’s Patented Neural Network Ray Tracing: A Glimpse Inside

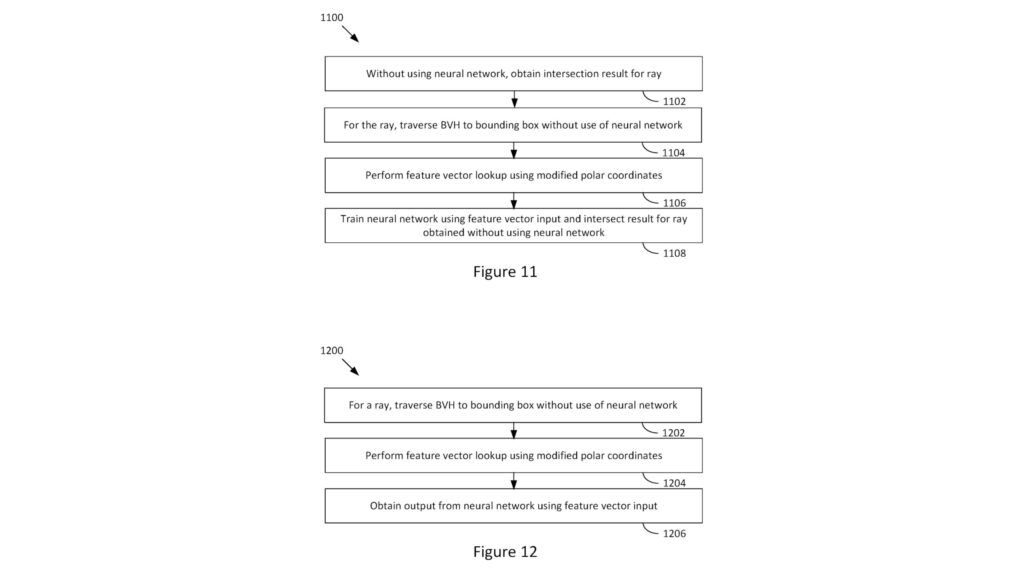

So how does the patented technique work? AMD’s filing describes a multi-step process for neural network-based ray tracing. Its goal is to determine whether a ray cast into a 3D scene from the camera’s viewpoint is blocked by an object in its path. The method begins with a bounding volume hierarchy (BVH) traversal, which walks through the scene’s geometry to find where the ray intersects bounding boxes. When it finds an intersection, the system performs a “feature vector lookup using modified polar coordinates.” Feature vectors, a core concept in machine learning, are numerical summaries of the properties of whatever is being analyzed. Shader units then run a small neural network on these feature vectors. The network’s output is a binary decision: either the ray is occluded or its path is clear. A diagram in the patent documentation illustrates both the training phase of the network and its place in the rendering pipeline, offering an intriguing look at how future GPUs might process graphics.
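The inference steps described above can be sketched as follows. This is a minimal illustration under stated assumptions, not AMD’s method. The article does not define the patent’s “modified polar coordinates” or the network layout, so `polar_cell` simply quantizes the ray direction into ordinary spherical-angle bins, and `NeuralOcclusion` uses a hypothetical one-hidden-layer network with random placeholder weights instead of trained ones. The BVH traversal that finds the box hit is assumed to have already happened.

```python
import math
import random
from typing import Tuple

Vec3 = Tuple[float, float, float]


def polar_cell(direction: Vec3, n_theta: int = 8, n_phi: int = 16) -> Tuple[int, int]:
    """Quantize a ray direction into a (theta, phi) grid cell.

    Hypothetical stand-in for the patent's "modified polar coordinates":
    theta is the angle from +z, phi the azimuth around z.
    """
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    theta = math.acos(max(-1.0, min(1.0, z / length)))   # range [0, pi]
    phi = math.atan2(y, x) % (2.0 * math.pi)            # range [0, 2*pi)
    i = min(int(theta / math.pi * n_theta), n_theta - 1)
    j = min(int(phi / (2.0 * math.pi) * n_phi), n_phi - 1)
    return i, j


class NeuralOcclusion:
    """Feature-grid lookup plus a tiny MLP for one bounding box the ray has hit.

    Weights and features are random placeholders; a real system would
    learn them during a training phase.
    """

    def __init__(self, feat_dim: int = 4, hidden: int = 8,
                 n_theta: int = 8, n_phi: int = 16, seed: int = 0):
        rng = random.Random(seed)
        self.n_theta, self.n_phi = n_theta, n_phi
        self.features = [[[rng.uniform(-1, 1) for _ in range(feat_dim)]
                          for _ in range(n_phi)] for _ in range(n_theta)]
        self.w1 = [[rng.uniform(-1, 1) for _ in range(feat_dim)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.uniform(-1, 1) for _ in range(hidden)]
        self.b2 = 0.0

    def occlusion_probability(self, direction: Vec3) -> float:
        i, j = polar_cell(direction, self.n_theta, self.n_phi)
        f = self.features[i][j]                                   # feature vector lookup
        h = [max(0.0, sum(w * v for w, v in zip(row, f)) + b)     # hidden layer with ReLU
             for row, b in zip(self.w1, self.b1)]
        logit = sum(w * v for w, v in zip(self.w2, h)) + self.b2
        return 1.0 / (1.0 + math.exp(-logit))                     # sigmoid, in (0, 1)

    def is_occluded(self, direction: Vec3, threshold: float = 0.5) -> bool:
        # Collapse the probability into the binary occluded / clear decision.
        return self.occlusion_probability(direction) >= threshold


model = NeuralOcclusion()
d = (0.3, -0.2, 0.9)
print(f"p(occluded) = {model.occlusion_probability(d):.3f}, occluded: {model.is_occluded(d)}")
```

The threshold step reflects the binary output the patent describes. Whether AMD’s network emits a probability that is then thresholded, or a hard decision directly, is an assumption here.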

AMD’s Enduring Commitment to Neural Rendering Innovations

The patent highlights AMD’s deep and sustained investment in ‘neural rendering.’ This commitment isn’t a recent reaction to industry buzz, such as the anticipation surrounding Nvidia’s RTX 50-series GPUs, but part of a long-term strategy. We at Digital Tech Explorer want to stress that a patent’s filing date (June 2023, in this case) seldom marks the start of the research behind it; technology this intricate takes years to mature. AMD’s work here reflects proactive, long-range research, not a belated attempt to catch up. History supports this: an AMD patent for a hybrid ray tracing procedure that became public in 2019 was originally filed in 2017. Although a direct link is hard to prove, the RDNA 2 architecture, launched in late 2020, used a very similar arrangement, which shows how long the path from research to finished product can be.
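The hybrid arrangement is commonly described this way for RDNA 2: the Ray Accelerators perform ray/box and ray/triangle intersection tests in fixed-function hardware, while the BVH traversal loop itself runs as shader code. The conceptual Python sketch below splits the work the same way. It is not AMD’s implementation. `Node`, `box_test`, and `traverse` are hypothetical names, and `box_test` (a standard slab test) merely stands in for the hardware unit.

```python
import math
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Node:
    lo: Vec3                                   # box minimum corner
    hi: Vec3                                   # box maximum corner
    children: List["Node"] = field(default_factory=list)
    prim_id: Optional[int] = None              # set on leaves only (hypothetical payload)


def box_test(origin: Vec3, direction: Vec3, lo: Vec3, hi: Vec3,
             t_max: float = math.inf) -> bool:
    """Slab-based ray/box test: the kind of check a fixed-function unit performs."""
    t0, t1 = 0.0, t_max
    for o, d, a, b in zip(origin, direction, lo, hi):
        if d == 0.0:
            if o < a or o > b:                 # parallel to this slab and outside it
                return False
            continue
        ta, tb = (a - o) / d, (b - o) / d
        if ta > tb:
            ta, tb = tb, ta
        t0, t1 = max(t0, ta), min(t1, tb)
        if t0 > t1:
            return False
    return True


def traverse(root: Node, origin: Vec3, direction: Vec3) -> List[int]:
    """Shader-side loop: software owns the stack, box_test stands in for hardware."""
    stack, hits = [root], []
    while stack:
        node = stack.pop()
        if not box_test(origin, direction, node.lo, node.hi):
            continue                           # prune this subtree
        if node.prim_id is not None:
            hits.append(node.prim_id)          # a real tracer would now test triangles
        stack.extend(node.children)
    return hits


leaf_a = Node((0, 0, 0), (1, 1, 1), prim_id=0)
leaf_b = Node((3, 3, 3), (4, 4, 4), prim_id=1)
root = Node((0, 0, 0), (4, 4, 4), children=[leaf_a, leaf_b])

print(traverse(root, (-1, 0.5, 0.5), (1, 0, 0)))        # [0]
print(sorted(traverse(root, (-1, -1, -1), (1, 1, 1))))  # [0, 1]
```

Keeping traversal in programmable code lets software change the stack and ordering policy, while the expensive, repetitive intersection math runs on dedicated units.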

The Dawning Era of AI in 3D Graphics Rendering

AMD’s steady exploration of AI in rendering, as shown by its recent patents, points to a transformative future role for artificial intelligence in 3D graphics. Patent documents don’t guarantee specific product features or performance gains, so a future card such as the Radeon RX 9070 XT won’t necessarily run these neural networks to enhance ray tracing, and implementation also depends on game developers adopting the technique. The direction of travel, however, is unmistakable: companies like AMD are channeling significant resources into AI for graphics. Why? Traditional ways of scaling performance, such as cramming billions more transistors and hundreds more shader units onto a chip, are delivering diminishing returns relative to their rising costs, and AI offers a compelling way around that problem. This is why upcoming architectures such as the anticipated RDNA 4 GPU are expected to include dedicated matrix units for these AI computations. Ultimately, if AI can make games run faster, look better, or ideally both, its integration into 3D rendering pipelines is not just an advance but an inevitable and welcome evolution for tech enthusiasts and developers alike.
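A rough back-of-the-envelope estimate shows why dedicated matrix units are relevant. The network shape, resolution, frame rate, and query count below are assumptions chosen for illustration, not figures from AMD’s patent. The point is that almost all of the work is dense multiply-accumulate (MAC) arithmetic, which is exactly what matrix units are built to accelerate.

```python
from typing import List


def mlp_macs(layer_sizes: List[int]) -> int:
    """Multiply-accumulate operations for one forward pass of a dense MLP (biases ignored)."""
    return sum(a * b for a, b in zip(layer_sizes, layer_sizes[1:]))


layers = [32, 64, 64, 1]        # hypothetical: 32 inputs, two hidden layers of 64, 1 output
width, height, fps = 1920, 1080, 60
queries_per_pixel = 1           # hypothetical: one neural occlusion query per pixel

per_query = mlp_macs(layers)
per_frame = per_query * width * height * queries_per_pixel
per_second = per_frame * fps

print(per_query)                                   # 6208
print(per_frame)                                   # 12872908800
print(f"{2 * per_second / 1e12:.2f} TFLOP/s")      # 1.54 TFLOP/s (2 FLOPs per MAC)
```

The total scales linearly with each factor, so several queries per pixel or a wider network quickly multiplies the cost.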